Context: AI-enabled cameras are becoming an increasingly important tool for the factory of the future. While the general aim of lean manufacturing is pretty clear (zero waste), the strategies to achieve it are manifold. Most of them, however, start with a digital representation of the production processes, the products or even the whole factory. Some even call this a digital twin. Much of the digital information this concept needs may be produced by the industrial machines and appliances themselves. However, the physical world of the factory (tools, manufactured goods and us human beings) will remain analog. Nowadays, cameras are expected to connect the physical world with its digital counterpart by turning physical objects into useful data sources.

Why Should You Use 3D Vision?

Simply because our world is 3D! 2D images provide only limited information: by dropping the third dimension, useful depth information is irretrievably lost. You see, when a 2D image of a spherical object (e.g., a ball) is captured, it is projected as a flat circle. Conversely, no algorithm could retrieve the real ball from that circular shape alone, because a flat disc would produce the same image.
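To make the loss of depth concrete, here is a minimal sketch of an ideal pinhole camera. The focal length and the two points are hypothetical values chosen for illustration. Every point on the same viewing ray lands on the same pixel, so the 2D image alone cannot tell a near object from a far one.

```python
def project(point, f=400.0):
    """Ideal pinhole projection of a 3D point (x, y, z) to image coordinates (u, v).

    f is a hypothetical focal length in pixels; the principal point is at (0, 0).
    """
    x, y, z = point
    if z <= 0:
        raise ValueError("the point must lie in front of the camera (z > 0)")
    return (f * x / z, f * y / z)


# Two points on the same viewing ray; the second is twice as far away.
near = (0.25, 0.125, 1.0)
far = (0.5, 0.25, 2.0)

print(project(near))  # (100.0, 50.0)
print(project(far))   # (100.0, 50.0)
```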

Image shows 3D data captured with the HemiStereo NX depth sensing camera. Since the Cartesian coordinates are stored together with the color information, the viewer’s point of view can be changed afterwards.

Depth sensing cameras, on the other hand, preserve the physical dimensions of an object by measuring all three dimensions of space. The algorithms are then fed with Cartesian coordinates [x, y, z] in addition to the color information. This allows the camera system to analyze the scene and retrieve information more robustly.
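The following sketch shows how a depth pixel can become a colored Cartesian point, and why that preserves physical size. For simplicity it assumes an ideal pinhole model (HemiStereo NX itself uses fish-eye optics, as described later in this article); the intrinsics `F`, `CX`, `CY` and the pixel values are hypothetical. The object spans half as many pixels at twice the distance, yet its measured width stays the same.

```python
import math
from dataclasses import dataclass


@dataclass
class ColoredPoint:
    """A 3D point in camera coordinates (meters) together with its RGB color."""
    x: float
    y: float
    z: float
    rgb: tuple


def back_project(u, v, depth, rgb, f, cx, cy):
    """Turn pixel (u, v) with measured depth z into a colored Cartesian point.

    Assumes an ideal pinhole camera with focal length f (pixels) and
    principal point (cx, cy); depth is the z-coordinate in meters.
    """
    x = (u - cx) * depth / f
    y = (v - cy) * depth / f
    return ColoredPoint(x, y, depth, rgb)


def distance(p, q):
    """Euclidean distance between two points, in meters."""
    return math.dist((p.x, p.y, p.z), (q.x, q.y, q.z))


# Hypothetical intrinsics and an object whose edges are seen at two distances.
F, CX, CY = 400.0, 320.0, 240.0
red = (255, 0, 0)
near_w = distance(back_project(220, 240, 2.0, red, F, CX, CY),
                  back_project(420, 240, 2.0, red, F, CX, CY))
far_w = distance(back_project(270, 240, 4.0, red, F, CX, CY),
                 back_project(370, 240, 4.0, red, F, CX, CY))
print(near_w, far_w)  # 1.0 1.0
```

The 200-pixel span at 2 m and the 100-pixel span at 4 m both yield a width of 1 m, which is exactly the information a plain 2D image cannot provide.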

Can 3D Cameras See Everything?

Not at all. 3D cameras face a large number of problems, which depend heavily on the technology used for spatial measurement. To name a few: transparent or reflective surfaces, structureless objects, repetitive patterns or very dark materials. That is why a number of different measuring principles have emerged on the market, each with specific pros and cons and at very different prices. A good overview of state-of-the-art 3D cameras can be found in this 3D camera survey.

Straight Lines Have to be Straight — Sure?

Every 3D sensing method is used in a distinct field of applications and therefore comes with a dedicated set of optimal performance parameters. But all of them have one thing in common: a limited field of view (FOV) of up to 90°, or up to 120° for some. The reason for this limitation is the perspective projection camera model, the mathematical model used to describe most commercial cameras. Its main feature is the rectilinear constraint: straight lines in the real world remain straight when projected into an image. Deviations from this behavior are usually called distortion and are expected to be removed. While this constraint undoubtedly makes things easier for certain applications, it has also become the camera industry’s default, and the advantages of leaving it behind are largely ignored. Let me explain.
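The rectilinear constraint can be checked numerically. This sketch projects three collinear 3D points with an ideal perspective model and, for contrast, with an ideal equiangular fish-eye model (ρ = f·θ). The focal length and the line are arbitrary assumptions. Only the perspective images stay on one straight line.

```python
import math


def project(point, f=400.0):
    """Ideal perspective projection: rho = f * tan(theta)."""
    x, y, z = point
    return (f * x / z, f * y / z)


def project_fisheye(point, f=400.0):
    """Ideal equiangular fish-eye projection: rho = f * theta."""
    x, y, z = point
    r = math.hypot(x, y)
    if r == 0:
        return (0.0, 0.0)
    rho = f * math.atan2(r, z)  # theta = atan2(r, z), angle from the optical axis
    return (rho * x / r, rho * y / r)


def is_collinear(a, b, c, tol=1e-9):
    """True if the 2D points a, b, c lie on one line (cross product ~ 0)."""
    cross = (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])
    return abs(cross) < tol


# Three points on one straight 3D line: P(t) = start + t * direction.
start, direction = (-1.0, 0.5, 2.0), (1.0, 0.25, 1.5)
line_3d = [tuple(s + t * d for s, d in zip(start, direction)) for t in (0.0, 0.5, 1.0)]

print(is_collinear(*[project(p) for p in line_3d]))          # True
print(is_collinear(*[project_fisheye(p) for p in line_3d]))  # False
```

The fish-eye image bends the line, which is precisely the "distortion" the rectilinear convention tries to remove, and the price it pays for a wider view.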

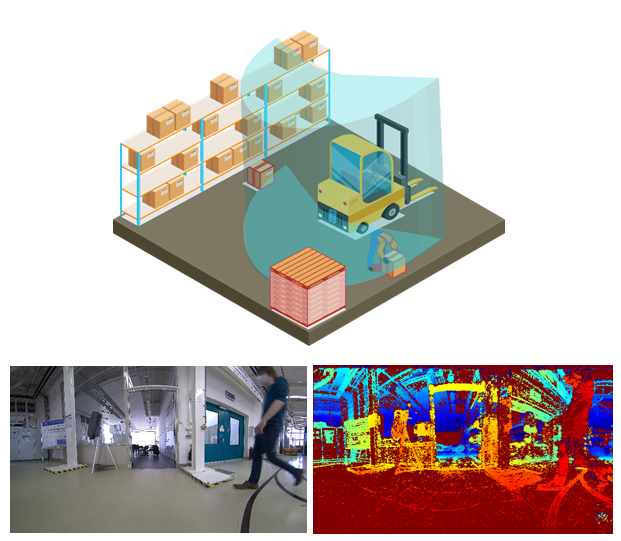

First, let’s take a closer look at this issue in an application field of growing importance in the automated factory of the future: collision avoidance for forklifts. A 3D camera could automatically monitor the vehicle’s surroundings and warn the driver if an obstacle or a person enters the driving path. That is a good idea, in principle.

Limited field of view due to rectilinear projection.

However, a worker can easily be overlooked when the vehicle reverses if the rear-view camera system perceives only 90° behind it. Judging by this camera image alone, the surroundings seem clear, and the assistance system has no chance to issue a warning. This can result in accidents or injuries.
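The blind spot can be quantified with a simple top-down sketch. It assumes, hypothetically, that the rear camera’s optical axis points straight back and that a worker stands 1.5 m to the side and 1.0 m behind the camera. A camera with a given FOV sees only points within half that angle of its axis.

```python
import math


def bearing_deg(x, z):
    """Angle (degrees) between the optical axis and the direction to a point.

    Top-down view: x is the lateral offset, z the distance along the optical
    axis, both in meters.
    """
    return math.degrees(math.atan2(abs(x), z))


def is_visible(x, z, fov_deg):
    """True if the point lies inside a horizontal field of view of fov_deg."""
    return bearing_deg(x, z) <= fov_deg / 2


worker = (1.5, 1.0)  # hypothetical position: 1.5 m to the side, 1.0 m behind
print(round(bearing_deg(*worker), 1))    # 56.3
print(is_visible(*worker, fov_deg=90))   # False: outside the 45° half-angle
print(is_visible(*worker, fov_deg=180))  # True
```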

How to Overcome This Limitation? — HemiStereo®

We at 3dvisionlabs are convinced that 3D cameras should see more. But most cameras’ FOVs are limited as a consequence of the rectilinear constraint of the underlying perspective camera model. By dropping this constraint, we are free to use different lenses and projection models to describe image formation. In the following example, we employ fish-eye lenses with equiangular (often called equidistant) projection.

Let’s see what the math has to say about it: A (3D) camera’s FOV is specified by the maximum angle of incidence θ (“Theta”) that still leads to a valid image point on the camera’s sensor. This image point is characterized by its distance ρ (“Rho”) from the center of the image sensor. See the following sketch:

Note: The angle of incidence θ is measured from the optical axis (the center) to the line of incidence, while the FOV is commonly defined from side to side. Hence $\text{FOV} = 2\,\theta_{\max}$.

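The relationship between these two variables is given by the underlying projection model. With focal length $f$, the perspective (rectilinear) model and the equiangular fish-eye model read:

$$\rho_{\text{perspective}} = f \tan\theta, \qquad \rho_{\text{fish-eye}} = f\,\theta$$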

Projection functions compared: for θ = 90°, the tangent runs toward infinity.

And what do we learn from that? Comparing the plots of both projection functions in the chart, we observe the following: as θ approaches 90° (i.e., the FOV approaches 180°), the distance ρ heads toward infinity under perspective projection. In other words: to display a 180° FOV, a common camera would need an infinitely large image sensor!
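The two projection functions can also be evaluated directly. This sketch assumes the ideal forms ρ = f·tan θ (perspective) and ρ = f·θ (equiangular fish-eye), with an arbitrary focal length of 1 mm.

```python
import math


def rho_perspective(theta, f):
    """Perspective (rectilinear) projection: rho = f * tan(theta)."""
    return f * math.tan(theta)


def rho_equiangular(theta, f):
    """Equiangular fish-eye projection: rho = f * theta."""
    return f * theta


f = 1.0  # focal length in mm (arbitrary example value)
for deg in (30, 60, 80, 89, 89.9):
    theta = math.radians(deg)
    print(f"theta={deg:>5}°  perspective={rho_perspective(theta, f):9.3f} mm  "
          f"fish-eye={rho_equiangular(theta, f):6.3f} mm")
```

At 89.9° the perspective model already needs an image radius of roughly 573 mm, while the fish-eye radius stays below π/2 ≈ 1.571 mm.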

Fish-eye lenses, in contrast, have an (approximately) linear relationship between θ and ρ. A 180° FOV can therefore be realized easily, even on a very small sensor.
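As a worked example, assuming the ideal equiangular model ρ = f·θ and a hypothetical focal length of f = 1 mm, a 180° FOV (θ_max = 90° = π/2) fits into an image circle of only about 3.14 mm diameter:

$$
\rho_{\max} = f\,\theta_{\max} = 1\,\text{mm}\cdot\frac{\pi}{2} \approx 1.571\,\text{mm},
\qquad d = 2\,\rho_{\max} = \pi f \approx 3.142\,\text{mm}
$$

The perspective model would instead require $\rho_{\max} = f \tan 90^\circ$, which is unbounded.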

HemiStereo® NX employs this principle not only for 2D imaging but also for 3D measurement. Equipped with two fish-eye lenses and a powerful processor, the sensor overcomes the limitations of rectilinear 3D sensors in real time.
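The article does not describe how HemiStereo NX computes depth internally. As a generic illustration only, the sketch below triangulates a point from the ray angles measured by two cameras a known baseline apart, using the law of sines in the epipolar plane. The geometry, the baseline `b` and the point are hypothetical. Because it works with angles rather than tangents, the computation stays well behaved for points far off the optical axis, beyond the reach of a 90° or 120° rectilinear camera.

```python
import math


def triangulate(alpha_left, alpha_right, baseline):
    """Locate a point from two ray angles in the epipolar plane (2D sketch).

    Hypothetical geometry: left camera at (0, 0), right camera at
    (baseline, 0), both looking along +y. alpha_left is the angle at the left
    camera between the baseline (towards the right camera) and the ray to the
    point; alpha_right is the matching angle at the right camera. Radians.
    """
    gamma = math.pi - alpha_left - alpha_right  # angle of the triangle at the point
    if gamma <= 0:
        raise ValueError("the rays do not intersect in front of the cameras")
    # Law of sines: |left -> point| / sin(alpha_right) = baseline / sin(gamma)
    dist_left = baseline * math.sin(alpha_right) / math.sin(gamma)
    return (dist_left * math.cos(alpha_left), dist_left * math.sin(alpha_left))


# Hypothetical point about 81.5° off the optical axis of the left camera.
px, py, b = -2.0, 0.3, 0.2
a_left = math.atan2(py, px)       # measured from the +x direction
a_right = math.atan2(py, b - px)  # measured from the -x direction
print(triangulate(a_left, a_right, b))  # approximately (-2.0, 0.3)
```

In a real system the angles would come from calibrated fish-eye images, and finding matching pixels in both views is the hard part; both are outside the scope of this sketch.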

Illustration: How HemiStereo NX sees the world.

Coming back to the collision avoidance example above, we are now able to perceive obstacles and people across a 180° field of view and warn the driver of imminent danger:
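The sketch below shows how such a warning could work. It assumes an ideal equiangular model ρ = f·θ, hypothetical calibration values (`f`, `cx`, `cy`), a measured distance along each pixel’s ray, and a rectangular warning zone behind the vehicle. It is an illustration, not HemiStereo NX’s software. Unlike the perspective model, the inverse mapping from pixel to viewing direction stays well defined up to θ = 90°.

```python
import math


def fisheye_project(point, f, cx, cy):
    """Equiangular fish-eye projection of a 3D point: rho = f * theta."""
    x, y, z = point
    r = math.hypot(x, y)
    if r == 0:
        return (cx, cy)
    rho = f * math.atan2(r, z)  # stays finite even at theta = 90°
    return (cx + rho * x / r, cy + rho * y / r)


def fisheye_ray(u, v, f, cx, cy):
    """Unit viewing direction for pixel (u, v), inverting rho = f * theta."""
    du, dv = u - cx, v - cy
    theta = math.hypot(du, dv) / f
    phi = math.atan2(dv, du)  # azimuth around the optical axis
    return (math.sin(theta) * math.cos(phi),
            math.sin(theta) * math.sin(phi),
            math.cos(theta))


def in_warning_zone(u, v, rng, f, cx, cy, half_width, depth):
    """Check whether a measured pixel lies in a rectangular zone behind the vehicle.

    rng is the distance along the pixel's ray (m). Camera coordinates: x lateral,
    z along the optical axis (pointing backwards from the vehicle).
    """
    x, _, z = (rng * c for c in fisheye_ray(u, v, f, cx, cy))
    return 0.0 <= z <= depth and abs(x) <= half_width


# Hypothetical calibration and zone; worker 1.5 m to the side, 1.0 m behind.
f, cx, cy = 300.0, 640.0, 640.0
worker = (1.5, 0.0, 1.0)
u, v = fisheye_project(worker, f, cx, cy)
rng = math.dist(worker, (0.0, 0.0, 0.0))
print(in_warning_zone(u, v, rng, f, cx, cy, half_width=2.0, depth=2.5))  # True
```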

A panoramic view with additional depth information opens up a broad range of new applications that see more than others. Examples include:

- Stable, highly reliable SLAM-based navigation for AGVs

- Indoor people tracking with a single 3D camera per room

- 3D mapping and reconstruction

Would you like to learn more about ultra-wide depth sensing? Then take a look at our latest white paper or watch our webinar on YouTube.