Navigating through unknown surroundings comes relatively easily to most humans. For autonomous mobile robots and automated guided vehicles (AGVs), however, it is a highly complex task. A sensor needs to construct a map of its surroundings and, at the same time, find its own location within this map. This is a chicken-and-egg problem: a map is needed for the localization, and a location is needed for the mapping. The most reliable solution is to estimate both at the same time, an approach called Simultaneous Localization and Mapping (SLAM).

SLAM algorithms fall mainly into two groups: visual and lidar-based. While lidar-based approaches are less sensitive to lighting conditions, they usually have a very limited field-of-view. Visual (stereo-based) SLAM algorithms, on the other hand, are easier to use, cost less, and are more robust against changes in the map, e.g. those caused by objects being moved. Because visual SLAM is based on a full camera and depth image of the scene, the same system can also be used to extract semantic information. Depending on the use case, being able to identify objects, e.g. by using Visual AI, can be a great benefit for the system.

Visual SLAM (VSLAM)

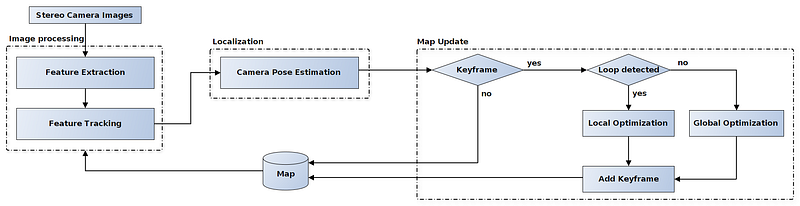

The basic principle behind VSLAM is to track a set of image points, so-called features, through successive camera frames in order to triangulate their 3D positions and, from these, estimate the camera pose. Without going into too much detail, the processing steps of a visual SLAM algorithm are summarized in the accompanying diagram.

Simplified processing steps of a visual SLAM algorithm
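To make the pose-estimation step a bit more tangible, here is a minimal sketch in Python. It is heavily simplified and not the algorithm running on the sensor: it works in 2D instead of 3D, assumes that the features are already matched between two frames, and assumes that their positions in sensor coordinates are known (as a stereo sensor could provide). The function name `estimate_rigid_motion_2d` and all numbers are made up for illustration. A real VSLAM pipeline additionally has to detect and match features, reject outliers, and refine the map.

```python
import math


def estimate_rigid_motion_2d(prev_pts, curr_pts):
    """Least-squares rotation and translation that map prev_pts onto curr_pts.

    Both arguments are lists of (x, y) tuples, where prev_pts[i] and
    curr_pts[i] are the same tracked feature seen in two successive frames.
    Returns (theta, (tx, ty)) such that curr ~= R(theta) * prev + t.
    For static features, the sensor's own motion is the inverse of this.
    """
    n = len(prev_pts)
    if n < 2 or n != len(curr_pts):
        raise ValueError("need at least two matched point pairs")

    # Centroids of both point sets.
    pcx = sum(p[0] for p in prev_pts) / n
    pcy = sum(p[1] for p in prev_pts) / n
    ccx = sum(c[0] for c in curr_pts) / n
    ccy = sum(c[1] for c in curr_pts) / n

    # Accumulate dot and cross products of the centred point pairs.
    dot = 0.0
    cross = 0.0
    for (px, py), (cx, cy) in zip(prev_pts, curr_pts):
        ax, ay = px - pcx, py - pcy
        bx, by = cx - ccx, cy - ccy
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx

    # Closed-form optimal rotation angle in 2D.
    theta = math.atan2(cross, dot)

    # The translation aligns the rotated previous centroid with the current one.
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    tx = ccx - (cos_t * pcx - sin_t * pcy)
    ty = ccy - (sin_t * pcx + cos_t * pcy)
    return theta, (tx, ty)


# Hypothetical feature positions (metres, sensor coordinates) in one frame.
prev_pts = [(1.0, 0.0), (0.0, 2.0), (-1.0, -1.0), (3.0, 1.5)]

# Simulate the next frame: the same features rotated by 10 degrees and shifted.
true_theta = math.radians(10.0)
true_t = (0.5, -0.2)
curr_pts = [
    (math.cos(true_theta) * x - math.sin(true_theta) * y + true_t[0],
     math.sin(true_theta) * x + math.cos(true_theta) * y + true_t[1])
    for x, y in prev_pts
]

theta, (tx, ty) = estimate_rigid_motion_2d(prev_pts, curr_pts)
print(f"rotation: {math.degrees(theta):.2f} deg, translation: ({tx:.2f}, {ty:.2f})")
```

Chaining such frame-to-frame estimates gives a trajectory, and placing the tracked features along that trajectory gives a map.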

Demo Use Case: Automated Guided Vehicles (AGV)

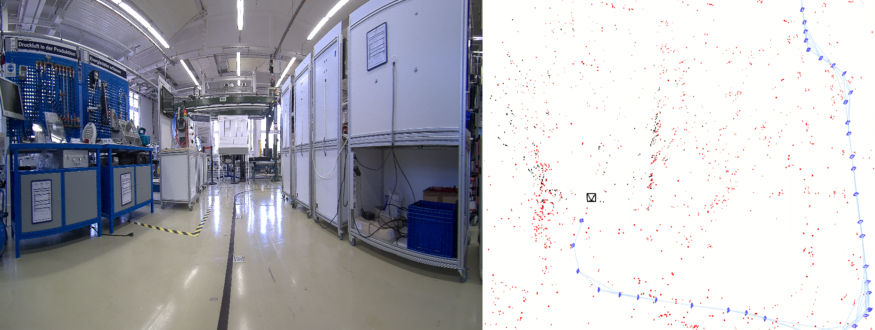

We were able to demonstrate our VSLAM application in a practical factory environment, which was kindly offered by our partner, the Department of Factory Planning and Factory Management (FPL) at Chemnitz University of Technology. As shown in the photo, HemiStereo® NX was mounted to a magnetic-tape guided AGV, which moved through the factory environment. We ran the VSLAM software and automatically generated a virtual map of the environment.

Our colleague Alex connects the sensor to the AGV

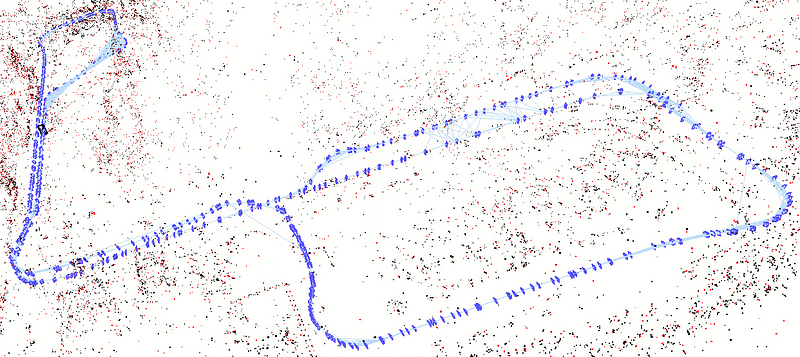

The figure shows the final map created by the sensor, including the path of the AGV. Since the sensor was mounted at the front of the AGV and the pivot point is approximately in the middle of the vehicle, the sensor swings out a bit in every curve. The mapping is accurate enough that this swing-out is even visible in the final map.

Map created by the SLAM algorithm. Blue markers show the path of the AGV; red and black points represent mapped features.
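The swing-out of the sensor follows from simple geometry, which the following Python sketch illustrates. It assumes an idealized steady turn in which the pivot point follows a circular arc and the vehicle heading stays tangent to that arc. The turn radius and mounting offset are made-up example values, not measurements of the AGV used in the demo, and the function names are hypothetical.

```python
import math


def sensor_position(pivot_xy, heading_rad, forward_offset_m):
    """Position of a sensor mounted forward_offset_m ahead of the pivot point."""
    x, y = pivot_xy
    return (x + forward_offset_m * math.cos(heading_rad),
            y + forward_offset_m * math.sin(heading_rad))


def swing_out(turn_radius_m, forward_offset_m):
    """Distance by which the sensor travels outside the pivot's arc."""
    return math.hypot(turn_radius_m, forward_offset_m) - turn_radius_m


# Assumed example values: a 1.0 m turn radius and a sensor 0.5 m ahead of the pivot.
turn_radius_m = 1.0
forward_offset_m = 0.5

# Left turn around the origin: the pivot stays on the circle, the sensor does not.
for step in range(5):
    phi = math.radians(step * 22.5)
    pivot = (turn_radius_m * math.cos(phi), turn_radius_m * math.sin(phi))
    heading = phi + math.pi / 2  # tangent to the circle, counter-clockwise
    sensor = sensor_position(pivot, heading, forward_offset_m)
    print(f"pivot radius: {math.hypot(*pivot):.3f} m, "
          f"sensor radius: {math.hypot(*sensor):.3f} m")

print(f"swing-out: {swing_out(turn_radius_m, forward_offset_m):.3f} m")
```

Under these assumptions the sensor moves on a larger circle than the pivot, and the difference grows with the mounting offset. This matches the outward bulge of the recorded path in the curves.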

A video sequence of the live demo application is shown here.

Visual SLAM 💙 HemiStereo NX

With HemiStereo NX we’re able to push the limits of this already very sophisticated technology. With its ultra-wide FOV of 180°, HemiStereo® NX is ideally suited to running binocular VSLAM algorithms. The large coverage of the scene helps to detect and track visual features very robustly. While visual SLAM using monocular fish-eye lenses is already best practice, HemiStereo NX additionally provides precise depth data for the whole field-of-view, further improving the accuracy of the system.

If you want to test this for yourself, you can get your own HemiStereo NX here. A full guide on how to get VSLAM running on HemiStereo NX can be found here.